As usual, the ToK day started with presentations of fellow classmates.

This ToK day dealt with the topic of technology. After being shown examples of what is considered technological, we were asked to define the term. This proved to be more complex than expected. It wasn’t as simple as having one clearly defined term, so we tried to adjust the given definition: Technology refers to objects which require technological know-how to be made and which are often created for a knowledge-related purpose. Once we had discussed the definition, we came to the conclusion that technology is not always man made and doesn’t need technological know-how.

After the introduction, we moved on to the scope of the topic. In exploring the role of technology in knowledge, it’s useful to understand how data, information, and knowledge build upon each other: data are raw observations, information is processed data, and knowledge involves applying information, like using research to study aging brains. Technology encompasses tools designed to support knowledge processes (creation, processing, storage, and sharing) using increasingly complex systems, such as artificial intelligence. AI processes data to solve problems; you can distinguish between "weak AI" which performs tasks based on its programming, while "strong AI" would act beyond its programmed constraints. Whether computers can truly "know" depends on our definition of knowledge; while they can process and apply information (unlike passive storage in books), counting this as "knowing" remains open to interpretation.

Later on, we focused on perspectives. The internet has changed access to and trust in information. It has shifted from a “gatekeeping” model, where knowledge was largely filtered through credible sources like educational institutions and publishers, to a platform where anyone can share content which raises concerns about reliability. Internet access isn’t equal for everyone due to costs and political restrictions, and users also struggle to identify reliable information among the vast amount of unchecked content on social media.

Additionally, biases can be embedded in technology, with consequences for safety and fairness, such as car crash tests primarily using male dummies, which puts women at higher risk. Historically, access to knowledge was even more restricted, as literacy was uncommon, and only a select few, like scribes, held the ability to read or write, controlling much of society's written knowledge. The rise of big data brings new ways to analyze information but also raises further questions about data creation and ethical use.

Technology has improved our capacity to create, store, and spread knowledge. The creation of knowledge relies heavily on observations, which technology has enhanced; however, our data is limited to what current technology can obtain. Knowledge storage has evolved from oral transmission to writing, and now computers, leading to exponential growth in the data we produce and store. Major milestones in the dissemination of knowledge include: the development of writing, Gutenberg’s movable-type press (increased literacy, sparked the European Renaissance / Enlightenment) and the internet, which has further accelerated the spread of ideas worldwide.

The ethical implications of technology are significant, especially as it collects amounts of personal data, for instance facial recognition, which can permanently store and recall our identities. Technology is not neutral; as mentioned, biases as well as values embedded in programming can influence its decisions. This can become particularly concerning as technology increasingly operates autonomously (self-driving cars). We used a simulation on https://www.moralmachine.net which showed us the difficulties in situations where you must think ethically. A key ethical dilemma is determining how much human oversight or ethical guidance should be built into technology to ensure it aligns with values of society. In recent years, biased or manipulated data has evidently impacted the spread of false news. This has affected major elections by influencing public opinion (propaganda) and potentially altering the outcomes of critical votes for leadership positions, highlighting the need for ethical oversight in information technology.

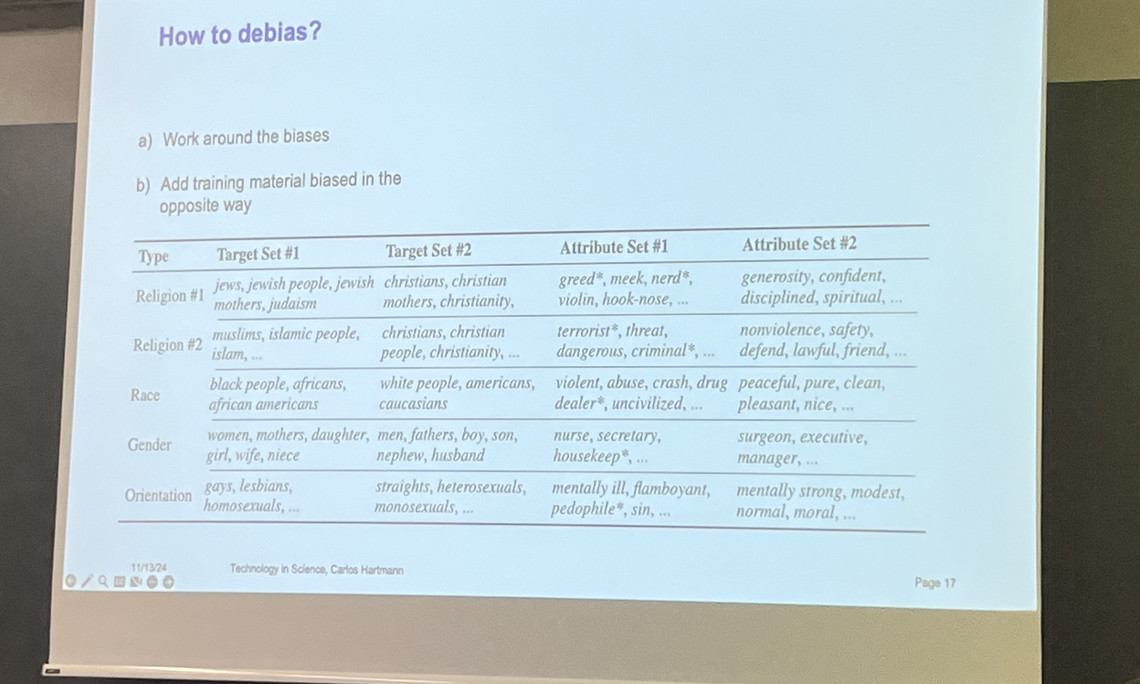

In the afternoon, we had a special guest, Carlos Hartmann, who is a student at the University of Zürich. He came to give us a lecture about the role of technology in science. His talk was very interesting because he focused a lot on ChatGPT, which is a well known AI tool. He explained how ChatGPT works and discussed the different problems and challenges it faces.

One of the coolest parts of his lecture was the discussion we had about the pros and cons of using ChatGPT. For example, we talked about how it’s really efficient and can save a lot of time. On the other side, Carlos pointed out that running such powerful AI systems takes a lot of energy and has a big impact on climate change. This was something most of us hadn’t thought about before, so this part was particularly interesting.

Carlos also went into detail about the development of ChatGPT and where it can be useful in science. He gave us examples of how scientists use it for research, data analysis, and even writing papers. It was fascinating to see all the different ways this technology is being applied.

To keep things fun, Carlos showed us some funny videos of university professors giving their students what seemed like easy tasks, but they turned out to be really tricky. These videos made everyone laugh and helped lighten the mood after all the serious discussions.

Overall, the day was very fun. We had a mix of learning activities that made the day really enjoyable. Carlos’s visit was definitely one of the highlights of our school year so far. We all left with a lot of new knowledge and a better understanding of the impact of technology in our world.

Livia Lee and Irina Lomakova, 5i